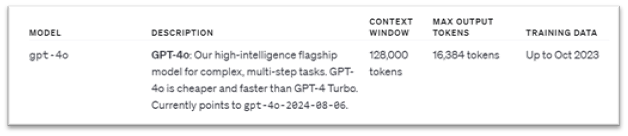

Let us look at the above image, we see there are two important terms mentioned

- Context window

- Max output token

At first glance, these might sound like technical jargon, but don’t worry—they’re simple concepts that make a big difference in how these models work. Let’s dive in!

Context: What Can the Model “See”? 👀

Before we get to the specifics, let’s start with context. Imagine you’re having a conversation or reading a long document—what if you could only remember a few words from the beginning of the conversation? That would make it hard to follow, right? In the same way, context refers to all the text the model can “see” or consider when generating its next word or response.

What’s a Token?

Now, what about tokens? Think of tokens as little chunks of text. A token could be as small as a single character or as big as a full word or punctuation mark.

Example

Sentence: “GPT-4o is powerful” could be split into 5 tokens:

Tokens:

“GPT”

“-“

“4o”

“is”

“powerful”

Context Window: How Much Can the Model “Remember”? 🧠

Here’s where the context window comes in. The context window is basically the size of the “memory” the model has while it processes or generates text. It determines how much information (in tokens) the model can handle at once.

In the example above, GPT-4o has a context window of 128,000 tokens. That means the model can process or “remember” up to 128,000 tokens at a time. This huge window allows the model to handle long conversations, lengthy documents, or complex tasks that require tracking a lot of information over multiple steps.

Max Output Tokens: How Much Can the Model Generate at Once?

Next up is max output tokens, which is pretty much what it sounds like—the maximum number of tokens the model can generate in a single response.

For GPT-4o, the max output is 16,384 tokens. Even if the model’s context is packed with information from a 128,000-token input, the most it can respond with in one go is 16,384 tokens.

Understanding these terms helps you see how a model like GPT-4o handles large tasks, keeps track of context, and generates useful, coherent responses!